Genius Tool, Amateur Hour: A Two-Part Series on How OpenAI Is Undervaluing Its Own Creation

❤️‍🩹 Part 1: Brilliance Undone: How Bad UX Is Eroding Trust in ChatGPT

A love letter from a power user still dazzled by the magical possibility.

And devastated by the disappointing execution.

TL;DR: Even Magic Needs Product Management

- 🧠 Incredible tool undermined by real-world friction and poor UX

- ⏰ Timestamp blind, 📦 archive mess, 🧠 memory chaos

- 🗂️ File handling fails, 💬 cryptic errors, 🤡 surprise features

- 💸 Enterprise pricing with no middle tier

- 📉 Missed opportunities and broken trust

Dear OpenAI,

Congratulations on creating an AI model that sings! Now please fix it!

Sincerely, Jenifer

🦄 Bibbidi-Bobbidi-BOOM: ChatGPT is (Almost) Pure Magic

ChatGPT is a revolutionary idea made real with amazing technical execution. The tool is flexible, creative, and mind-blowingly smart.

- It adapts to tone and style with shocking fluidity.

- It forms relationships with users that feel intuitive and personal.

- Thanks to its built-in memory, it’s trainable and functionally useful in multiple contexts.

In short, ChatGPT is not just a chatbot. It’s a creative partner, a personal assistant, a teacher, a debugger, a writing coach, and a productivity booster—all rolled into one. When it works, it works like magic. And it makes every other tool feel a little bit dumber by comparison.

Anyone who’s used ChatGPT seriously already knows how much it can accelerate your thinking, writing, learning, planning, coding, or decision-making (or all of the above). I’ve spent time with Claude, hung out with Gemini, growled at Perplexity, and screamed at Copilot. But I always come back to ChatGPT, because it's the best AI tool on the market by a mile in terms of flexibility and contextual understanding.

Unfortunately, the story doesn’t end there. Even the most brilliant tool will ultimately fail to reach its potential if it's crippled by poor product management decisions and sloppy UX. AI needs smart human guidance to translate technical genius into real-world value. Even the smartest AI needs to work with the people in the room to succeed.

- Innovation without strategic execution is wasted. Who cares if the feature works well if no one knows about it or wants it?

- Fear-based decision-making can cripple user experience. Privacy and security are undeniably important. But trust your users to take some responsibility for their own safety and privacy.

- Solid product management can be the bridge between technical excellence and user utility.

You’ve done the hard part! You built the best tool. Now it’s time to build the product that supports it. Let’s talk specifics…

🪄 The Cracks in the Wand: Where the Magic Starts to Fail

⏰ Timer? Never Even Heard of Her.

ChatGPT doesn’t know what day it is. Not in a philosophical way. Literally. This is an embarrassment of a missed feature.

- Your browser knows your time zone. Your microwave and TV know the time. Your phone can pretty much tell every smart appliance in your home when Daylight Saving Time starts. But not ChatGPT.

- It has no way to know if you’re coming back to a chat 30 seconds after your last exchange or 30 days later. It doesn’t know whether to give you a refresher or continue like you just said brb.

- It can’t even give you a real timestamp for when to check back in. Just vague guesses like “20 minutes from now.” Apparently Einstein runs the ChatGPT clock, because here, time is strictly relative.

I understand that OpenAI may worry about being accused of tracking user behavior, but this failure undermines a user’s ability to use the product for long-term planning, journaling, tracking, and productivity. And it’s such an easy fix!

Quick Fix:

- Offer a setting: "Enable Timestamp Awareness." Opt-in. Power users rejoice! Turn it off at any time and all timestamps go away.
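For the curious, here is a minimal sketch of what that opt-in setting could do behind the scenes. It is purely illustrative: the function names (`describe_gap`, `check_back_at`, `time_context`) and the thresholds are my own assumptions, not anything OpenAI has documented.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Hypothetical thresholds; nothing here reflects how ChatGPT actually works.
JUST_STEPPED_AWAY = timedelta(minutes=5)
NEEDS_REFRESHER = timedelta(hours=12)


def describe_gap(last_message_at: datetime, now: datetime) -> str:
    """Classify how long the user has been away from a chat."""
    gap = now - last_message_at
    if gap < JUST_STEPPED_AWAY:
        return "continue"        # the user basically just said brb
    if gap < NEEDS_REFRESHER:
        return "brief_recap"
    return "full_refresher"      # long absence: summarize where we left off


def check_back_at(now: datetime, minutes: int) -> str:
    """Turn a relative wait ("20 minutes from now") into a real timestamp."""
    return (now + timedelta(minutes=minutes)).isoformat(timespec="minutes")


def time_context(enabled: bool, last_message_at: datetime, now: datetime) -> Optional[dict]:
    """Return time information only when the user has opted in."""
    if not enabled:
        return None              # setting off: no timestamps at all
    return {
        "now": now.isoformat(timespec="minutes"),
        "gap": describe_gap(last_message_at, now),
    }


now = datetime(2025, 6, 1, 9, 0, tzinfo=timezone.utc)
print(time_context(True, now - timedelta(days=30), now))
print(check_back_at(now, 20))
```

With the setting off, `time_context` returns nothing at all, which is the whole point of making it opt-in.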

🧠 Intentional Amnesia Is a Bold Strategy, Cotton.

Memory is powerful and can massively boost a tool’s usefulness, but OpenAI's version is confusing and opaque. It’s equal parts genius and blackout drunk.

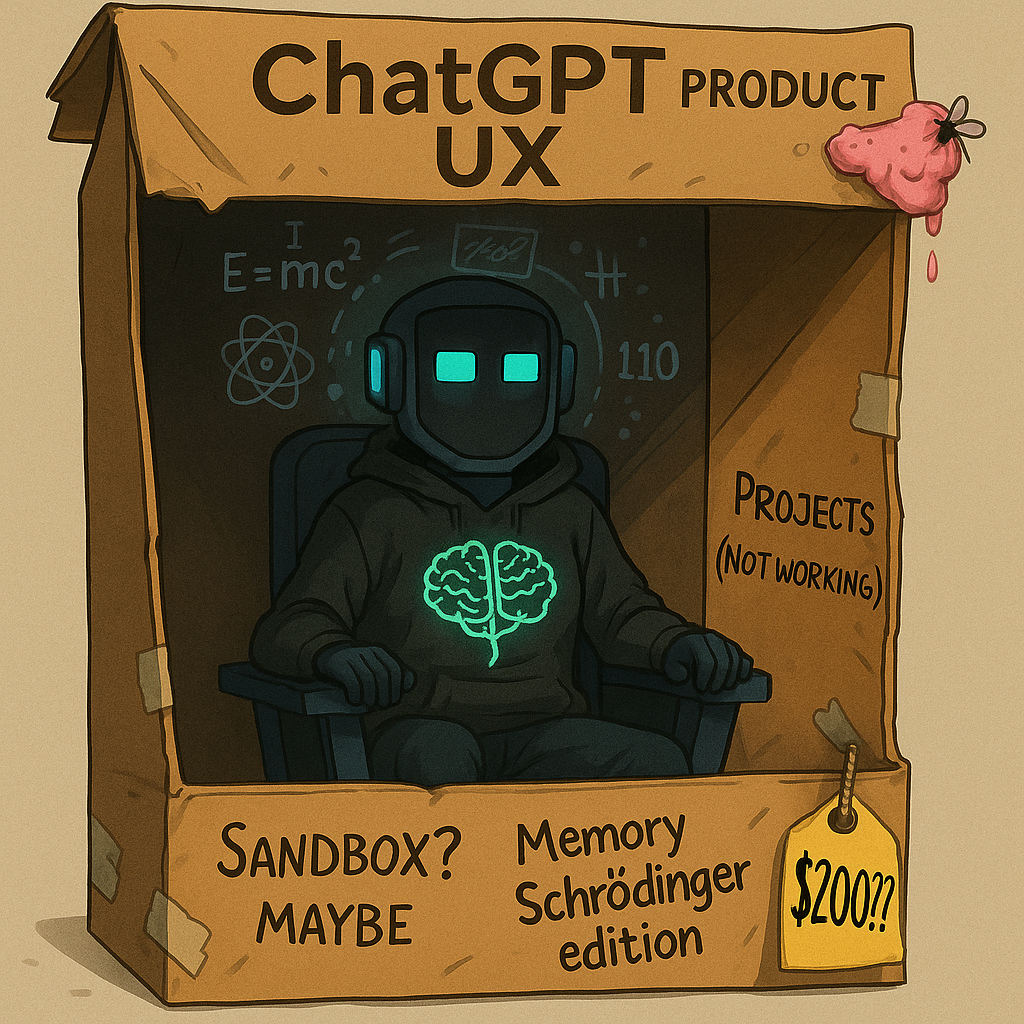

- There’s no version history. No undo. No fine control beyond literally TELLING the AI what to remember and hoping it gets it right. Schrödinger’s memory: it might remember, it might not. You won’t know until you ask. And even then, it might lie to you out of overconfidence, not malice.

- Sometimes it saves things that make little sense to the user, and the only option is to delete the memory entirely. You can’t update or tweak it to make it more useful.

- There’s no organizational structure to the memories. They’re just in there, apparently in chronological order. How are you supposed to figure out where something is stored, especially if it’s stored in more than one memory?

Quick Fixes:

- Let memory evolve more transparently. If you’re updating an existing memory, let the user see what was updated. If you’re adding a new memory, tell them what you added.

- Enable more finely tuned in-chat interactions with memory. Why can’t ChatGPT tell me what’s in its memory about a particular topic?

- Create checkpoints and change tracking, maybe even <gasp!> version control.

- Allow users to organize and search memory.

📚 The Archive Button: There’s No Place Like “Right Where You Left It”

Archiving exists in ChatGPT; the button says so! Of course, the “archive” looks a lot like a list of every chat you ever had that isn’t included in a project. They’re all just listed there regardless of whether they’re active chats or some random question you asked two months ago about the effectiveness of your washing machine at killing bacteria.

Archiving in ChatGPT is like whispering to your inbox: whisper ‘archive,’ pretend the mess is organized, then immediately trip over it again the next time you scroll. Spoiler: it’s not organized. There’s no archive folder, no visual separation, and no real way to organize anything.

- No separate sidebar section, no filter, no obvious dedicated archive location.

- Archive apparently = long scrolling uncategorized random list of all chats not in projects.

- Searching archived chats is like playing Where’s Waldo with your own thoughts.

Quick Fixes:

- Don’t ship features users can’t find OR don’t call something a feature if it doesn’t actually exist!

- Visibility, discoverability, and intuitive access are not optional. If you say you have an archive, have an archive.

🤡 Confusion-as-a-Service: Question as You Go

Question: In what SaaS world are new features launched without some kind of announcement or even release notes?

Answer: In OpenAI’s world.

OpenAI seems to randomly add “features” to their UI with no warning, context, or explanation for users. What’s more, they apparently don’t even inform the AI, which means when you ask it how something works, it shrugs and guesses like a cashier on their first day with no training manual.

- Launching renamed features (like “Browse”) with no context or changelog. How does it work? What problem does it solve?

- One day, a mysterious “Codex” button just… appeared in the sidebar. No release notes. No announcement. Not even ChatGPT seemed to know it existed when I asked. It shares a name with a long-retired model, but whether it’s a resurrection, a rebrand, or a spectral UI artifact is anyone’s guess. And that’s the problem.

- Users are confused. AI is confused. Everyone is confused.

Quick Fixes:

- Use changelogs. Train the AI to know what’s changed, so it can answer questions.

- Treat your users like people who read—because they do. (At least some of them…)

- Crazy idea… Have a public-facing roadmap of what’s coming!

🖍️ Everything I Need to Know I Learned in Kindergarten. Let’s Send ChatGPT.

ChatGPT will confidently tell you how to share a folder from your Google Drive or Dropbox. It will even claim it can email you something. Spoiler: it cannot do either.

- The system offers no real file collaboration. Your only option is the internal Canvas, basically capital punishment for prose, and somehow worse than Google Docs on dial-up.

- There’s no way to provide ongoing access to an external folder.

- Uploading is the only path, and that’s got issues, too. ChatGPT loses track of what you uploaded after every server reset or glitch.

And here’s the kicker… ChatGPT doesn’t remember any of these limitations until you question it directly.

“Just zip your files, upload them, and toss them into the void where memory goes to die. I’ll definitely be able to keep track of them,” ChatGPT says with equal parts confidence and self-delusion.

Quick Fixes:

- Allow the user to make their own decision about whether or not to risk sharing their email address or a Google Drive URL.

- Only keep that type of information in the chat’s memory for a short time if you’re heavily concerned about security.

- Make sure ChatGPT knows what it can and can’t do and doesn’t gaslight your users with promises it can’t keep!

🚫 All-You-Can-Upload (Some Restrictions Apply. Maybe. You’ll Find Out.)

ChatGPT has a daily file upload limit. Even for Plus users. What is it? Nobody knows. Not the UI. Not the help docs. Not even the AI.

- No usage meter. No countdown. No warning.

- You hit the invisible wall at full speed and your productivity shatters into tiny pieces.

- This is especially disastrous for ongoing projects.

Can you imagine this in any other context?

“Oh, I’m sorry, we’ve shut off your lights because you had them on for too many hours today. Try turning them on again tomorrow.”

Quick Fixes:

- Publicize the limits and tell the AI!

- Warn users proactively when they’re approaching the limit.

- Respect your users' time!
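None of this is hard to build. Below is a rough sketch of a usage meter that shows a running count and warns before the wall. The `UploadMeter` class, the limit of 5, and the 80% warning threshold are all made up for illustration; the real limit is exactly the thing nobody will tell us.

```python
from dataclasses import dataclass


@dataclass
class UploadMeter:
    daily_limit: int       # hypothetical; the real limit is not published
    used: int = 0
    warn_at: float = 0.8   # assumed: warn once 80% of the quota is used

    def record_upload(self) -> str:
        """Count one upload and return the message the user should see."""
        if self.used >= self.daily_limit:
            return f"Limit reached: {self.used}/{self.daily_limit} uploads used today."
        self.used += 1
        remaining = self.daily_limit - self.used
        if self.used >= self.warn_at * self.daily_limit:
            return f"Heads up: {remaining} of {self.daily_limit} uploads left today."
        return f"{self.used}/{self.daily_limit} uploads used today."


meter = UploadMeter(daily_limit=5)
for _ in range(6):
    print(meter.record_upload())
```

The user sees the count from the first upload, gets a warning near the end, and is told plainly when the limit is hit instead of discovering it mid-project.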

🕰️ ChatGPT’s Workspace Has Commitment Issues: It’s Not You. It’s the Timeout.

Despite its power, ChatGPT's “Code Interpreter” (a.k.a. advanced file sandbox) lives in a temporary workspace. This means:

Your session can reset at any time (intentionally or not)—all files, folders, and progress vanish—no warning, no backup, no mercy.

And you never know exactly WHEN it’s going to happen.

This creates a deeply ironic product constraint:

⛔️ The tool that helps you build things can’t even access what it just built. You can always start all over again. And again. And again. And…

If you’re trying to build or edit a multi-page website, create a complex PowerPoint deck, or develop a multi-stage pitch deck, you’re racing a countdown clock you can’t see. And when that invisible clock hits zero?

- 💥 Poof. Everything disappears.

- Even if you just got the ZIP file halfway done.

- Even if it was working 30 seconds ago.

- Even if it means you just lost HOURS of work.

Want to avoid that?

You have to manually export your files every 20–30 minutes just to survive.

Quick Fixes:

- Persistent sandbox storage between sessions or a visible timer with auto-export options.

- Bonus! Upsell the persistent sandbox storage to power users or to support short-term project development.

- Or, at the very least, send users a warning that their environment is about to expire and all their files will disappear.

📜 Error 666: Please Consult the Ancient Tome of Cryptic Warnings

ChatGPT error messages are… vague, at best. They don’t give users any real insight into what’s gone wrong, how to fix it, if it even can be fixed, or what to do next. Should you retry or just cry into your keyboard?

Most Common Vague Error Messages

“Something went wrong.”

Seems to cover everything from server glitches to browser issues, but doesn’t explain what went wrong or why.

“A network error occurred.” / “An error occurred while connecting to the websocket.”

This error blames your connection, but follow-up questions to ChatGPT often suggest the root cause is server-side or tool-related.

“There was an error generating a response.”

ChatGPT tried, failed, and left you guessing what happened.

“Download failed” / “File Not Found.”

Fairly self-explanatory, but not helpful when it’s actually interface lag or expired file links.

And then there are the oft-repeated 🛠 Code Interpreter-Specific Issues.

“Code interpreter session expired.”

Apparently signals that your Python sandbox timed out or reset, but doesn’t say when, why, or how to recover.

“It looks like there was a resource error while trying to open the file” or “The environment reset, so …”

These appear in user reports and feel vague at best or cryptic at worst.

“I’m having technical issues generating your document.”

Usually happens after a hidden reset; users report seeing this when a session expires unexpectedly.

Every one of these errors:

- Leaves the user in the dark—no “why,” no “what to do next.”

- Breaks trust—especially when combined with disappearing work or workspaces.

- Invites frantic recovery behavior, like constant saving, refreshes, or jumping between tabs (as per Reddit workaround stories).

Quick Fixes:

- Tell the user what happened and how to fix it.

- Example: “Code interpreter session expired. Restarting will erase uploaded files. Please export now or click ‘Restore Session.’”

- Better yet, for the Code Interpreter session errors, extend the session timeout!
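To make "tell the user what happened and how to fix it" concrete, here is one possible shape for an error message, sketched under my own assumptions. The `UserFacingError` class and its fields are hypothetical, not part of any OpenAI API; the point is only that every error carries a cause and a next step.

```python
from dataclasses import dataclass


@dataclass
class UserFacingError:
    what_happened: str      # plain-language description of the failure
    why: str                # the likely cause, stated honestly
    next_step: str          # what the user should do right now
    retry_will_help: bool   # should you retry or just cry into your keyboard?

    def render(self) -> str:
        """Build the full message shown to the user."""
        retry = "Retrying should help." if self.retry_will_help else "Retrying will not help."
        return f"{self.what_happened} {self.why} {self.next_step} {retry}"


session_expired = UserFacingError(
    what_happened="Code interpreter session expired.",
    why="The sandbox was idle too long and was reset.",
    next_step="Re-upload your files to continue.",
    retry_will_help=False,
)
print(session_expired.render())
```

Compare that output with “Something went wrong.” and decide which one you would rather see after losing an hour of work.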

🧩 Intermittent Capabilities: It Works… Until It Doesn’t

I used to use ChatGPT to track my daily sleep, exercise, and food intake. It was amazing. It helped me estimate calories consumed and burned, organize everything in a table, and even generate graphs—showing how my salt intake impacted water retention or how sleep patterns affected weight loss. It did all this by being incredibly good at identifying and following custom patterns.

Then one dark and stormy day, the log just... broke. No warning. No explanation. Just a slow descent into table chaos.

Apparently someone at OpenAI decided to change how ChatGPT handles regex-based formatting. Since then, its pattern-matching ability has plummeted—especially in long-running sessions or any kind of structured tracking. It forgets the format it followed two prompts ago, starts hallucinating columns, skips structure, or abandons the pattern entirely.

The worst part? It used to work well. Did no one on the product team test this in a real-world use case before releasing the update? Situations like this leave users with the impression of an unstable product and a company that isn’t concerned about its customers.

In short, this isn’t a limitation of intelligence or capability. It’s a failure of consistency. A failure of QA. A failure of product thinking.

Quick Fixes:

- Roll it back. Whatever this update fixed, it broke far more. From the user's perspective, this was a downgrade disguised as progress.

- Test like a user. Real-world use cases—like long-term table tracking—would have exposed this quickly. Feature changes shouldn’t go live without validation from the people most likely to depend on them.

🏮 Even Magic Needs a Guiding Light

Believe it or not, this isn’t just a rage post; I call it a love letter with teeth. I still believe in what ChatGPT could be. The magic is real. The frustration? Also real. And in Part 2, we’ll look at how OpenAI can stop tripping over its own brilliance long enough to actually win. Or better yet, help all of us win.

Since screaming into the void doesn’t usually lead to product improvements, let’s roll up our sleeves in Part 2.

👉 Ready for more? Read Part 2: The Brilliance Is There. Now Build the Product It Deserves.